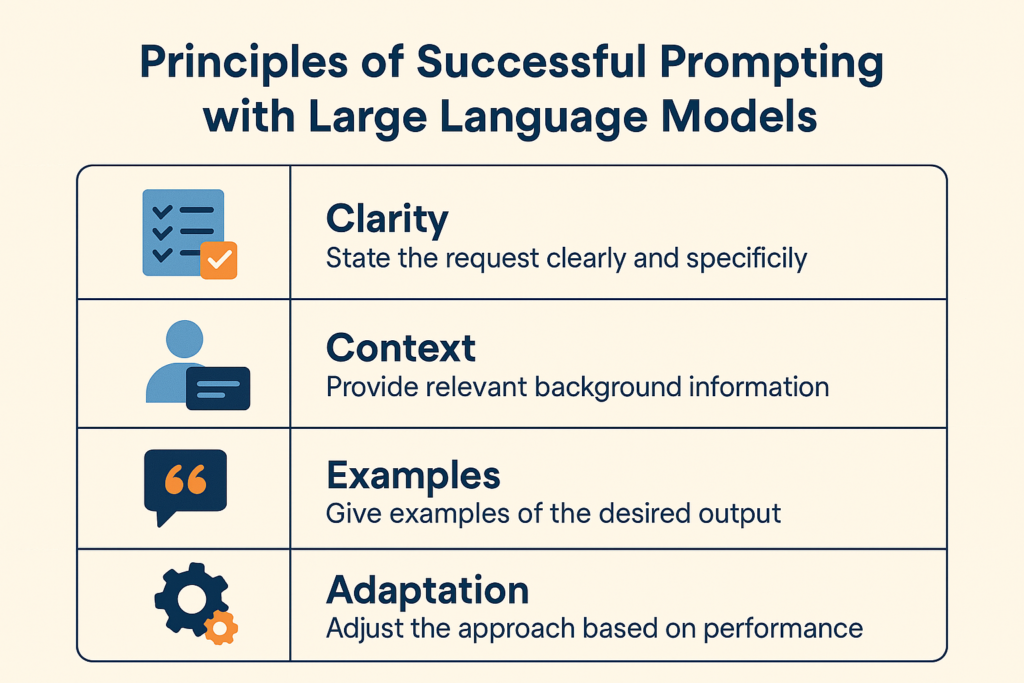

Crafting the right prompt is both an art and a science. Large language models are incredibly powerful, but they aren’t mind readers – the quality of their output heavily depends on how we ask our questions (26 Prompting Principles for Optimal LLM Output). In fact, the way a task or question is articulated can significantly influence the model’s interpretation and the quality of the response. Prompting an LLM is essentially the art of precise communication (Best Prompt Techniques for Best LLM Responses | by Jules S. Damji | The Modern Scientist | Medium). A poorly worded prompt might yield a generic or incorrect answer, while a well-crafted one can unlock detailed, accurate, and useful responses. This is especially important for everyday uses of LLMs, from writing a creative story or answering a customer query, to teaching a concept or simply retrieving information. The challenge (and opportunity) for users is learning how to “talk to” these models effectively to get the best results.

This article presents a comprehensive framework, a set of principles and stages, for successful prompting with LLMs. We’ll break the process into clear steps: defining your goal and audience, providing context, being clear and specific, specifying tone and style, outlining the output format, and refining through iteration. Along the way, we provide examples (non-coding) for use cases like creative writing, customer support, education, and general Q&A. We also include tips on optimizing prompts for tone, accuracy, completeness, and safety, as well as common pitfalls to avoid. By the end, you’ll have a solid, practical understanding of how to get the most value from LLMs for everyday productivity, creativity, and communication.

1. Define Your Goal and Audience

The first step to an effective prompt is knowing exactly what you want to achieve and who the answer is for. Before you start typing, take a moment to clarify your goal: Are you looking for a factual explanation, a creative story, a step-by-step guide, or an opinion? Having a clear objective in mind will shape how you phrase the prompt (12 prompt engineering best practices and tips | TechTarget). Equally important is identifying the target audience or perspective for the response – this ensures the answer is tailored with the appropriate level of detail, complexity, and tone (26 Prompting Principles for Optimal LLM Output).

For example, suppose you want an explanation of a technical topic. Simply asking “Explain machine learning.” might yield a generic answer. But if you specify the audience, you guide the complexity of the response. Consider these two prompts:

- “Explain the concept of machine learning to an expert in data science.”

- “Explain the concept of machine learning to a high school student.”

Both prompts ask about machine learning, but the expected depth and language are very different. In the first case, the model should use advanced terminology and assume prior knowledge; in the second, it should keep the explanation basic and approachable. Indeed, adding detail about the target reader can dramatically change the output: “Explain the three laws of thermodynamics for third-grade students” will produce a much simpler, shorter answer than “Explain the three laws of thermodynamics for Ph.D.-level physicists.” (12 prompt engineering best practices and tips | TechTarget). By defining who the information is intended for (yourself or someone else, and at what knowledge level), you help the LLM format the answer appropriately.

Tips for this stage: Before prompting, decide on the outcome you want and, if relevant, state it in the prompt. Be explicit about any role or perspective (“a beginner’s guide”, “for an expert reader”, “as a friendly adviser to a customer”, etc.). This initial clarity will set the stage for everything that follows.
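
To make the goal-and-audience step concrete, here is a minimal Python sketch that attaches an explicit audience (and, optionally, the desired outcome) to a task. The function name `build_prompt` and its phrasing are hypothetical; it only assembles a prompt string and does not call any model.

```python
from typing import Optional


def build_prompt(task: str, audience: str, outcome: Optional[str] = None) -> str:
    """Attach an explicit audience (and optionally the desired outcome) to a task."""
    # Strip a trailing period so the audience clause joins the sentence cleanly.
    prompt = f"{task.rstrip('.')}, written for {audience}."
    if outcome:
        prompt += f" The result should be {outcome}."
    return prompt


# Same topic, two audiences: only the audience clause changes.
for reader in ("an expert in data science", "a high school student"):
    print(build_prompt("Explain the concept of machine learning", reader))
```

Keeping the audience as a separate argument makes it hard to forget: every prompt built this way states who the answer is for.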

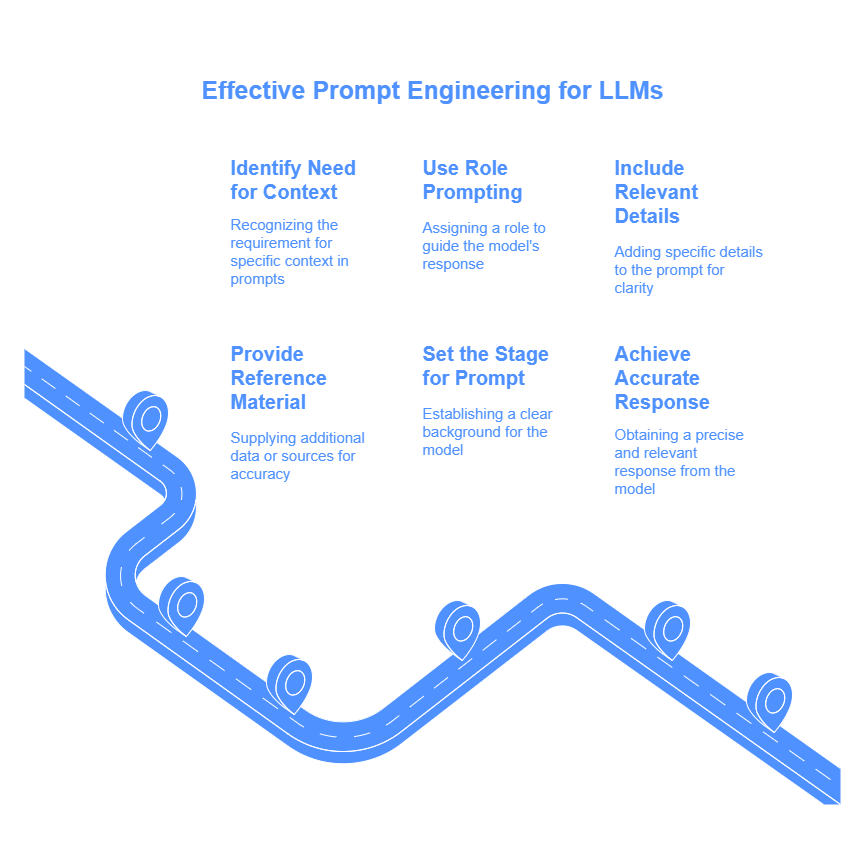

2. Provide Context and Background Information

LLMs don’t have awareness of your specific situation or what’s in your head – they only know what you tell them in the prompt (plus their general trained knowledge). That’s why providing adequate context is key. If your query is about a particular scenario or requires certain background facts, include those details upfront. The goal is to supply any information that will guide the model’s response in the right direction (OpenAI’s New Guide on Prompt Engineering: Six Strategies for Better Results – Association of Data Scientists).

One powerful way to provide context is through role prompting (persona-based context). This means telling the model to adopt a certain role or point of view relevant to your task (Role Prompting: Guide LLMs with Persona-Based Tasks). For instance, you might begin a prompt with “You are a helpful customer support agent…” or “Act as a history professor…”. Assigning a persona guides the model’s style, tone, and focus, aligning its answer with the role’s perspective (Role Prompting: Guide LLMs with Persona-Based Tasks). Because the response becomes more context-specific, it is often clearer and more relevant. For example, if you want advice on a financial issue, a prompt that starts with “You are a financial advisor trained to give prudent, conservative advice.” sets a clear context and expectation for the kind of answer that will follow.

Include any relevant details the model would need to know. If you’re asking for help with a task, describe the situation. For example, instead of asking “What should I say to apologize to a customer?”, add context: “You are a customer service assistant. A customer is upset because their package arrived late. Draft an email that apologizes and explains how we will make it right.” Here, we’ve given the model a role (customer service assistant), the key facts (the package was late, the customer is upset), and a concrete task, which will lead to a much more targeted and useful answer than a generic prompt. Similarly, for a creative writing prompt, setting context might mean describing the setting or characters: “Tell a short story set in medieval times where a knight must make a moral choice…”. These details prime the model with the scenario you have in mind.

Another aspect of context is providing reference material or data if available. LLMs have general knowledge up to their training cutoff, but they can still “hallucinate” (make up facts) when specifics are needed (OpenAI’s New Guide on Prompt Engineering: Six Strategies for Better Results – Association of Data Scientists). If you have a source or snippet of text that is important for answering your question, include it. For instance, you might supply a paragraph from an article and ask the model to answer a question based on that text. OpenAI’s guidance notes that giving the model reference text can lead to more accurate and reliable answers (OpenAI’s New Guide on Prompt Engineering: Six Strategies for Better Results – Association of Data Scientists). Always let the model know the information you expect it to use rather than hoping it will pull the right detail from memory.
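
Here is a sketch of role plus reference text expressed as a chat-style message list. It assumes the common convention of `{"role": ..., "content": ...}` dictionaries that many chat APIs accept; check your provider’s documentation for the exact format. The helper `grounded_messages`, the triple-quote delimiters, and the sample policy text are illustrative assumptions, not a specific vendor’s API.

```python
from typing import Dict, List

Message = Dict[str, str]


def grounded_messages(role: str, reference: str, question: str) -> List[Message]:
    """Pair a persona (system message) with delimited reference text (user message)."""
    system = (
        f"{role} Answer using only the reference text inside triple quotes. "
        "If the text does not contain the answer, say so."
    )
    # Delimiters make it unambiguous where the reference material starts and ends.
    user = f'"""{reference}"""\n\nQuestion: {question}'
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


# Hypothetical policy snippet supplied as reference text.
policy = "Late deliveries qualify for a 10% refund on shipping costs."
messages = grounded_messages(
    "You are a helpful customer support agent.",
    policy,
    "Is a customer whose package arrived late entitled to a refund?",
)
for m in messages:
    print(f"[{m['role']}] {m['content']}")
```

Putting the persona in one message and the source text in another keeps "who to be" separate from "what to rely on", which makes either part easy to swap.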

In summary, set the stage for your prompt. Give the model the background it needs (facts, context, or role) so it isn’t guessing the scenario. The more relevant context you provide, the more grounded and on-point the response will be.

3. Be Clear and Specific in Your Instructions

Clarity is the golden rule of prompting. When telling the model what you want, say it in clear, specific terms – don’t make it decipher vague requests or fill in blanks. Ambiguous or overly general prompts often yield off-target or generic responses (Common Pitfalls to Avoid in AI Prompt Writing | by Michael Phillips | The Functional Technologist | Medium). To get useful results, you should describe exactly what output you need, and include any crucial details (the who, what, when, where, how) directly in your question (12 prompt engineering best practices and tips | TechTarget).

One common mistake is not providing enough detail. For example, “What time is high tide?” is an ineffective prompt because it’s missing key information. High tide where? On what date? Tides vary by location and day, so the model cannot give a correct answer without those details (12 prompt engineering best practices and tips | TechTarget). A much better prompt would be: “What times are high tides in Gloucester Harbor, Massachusetts, on March 31, 2025?” (12 prompt engineering best practices and tips | TechTarget). In this improved version, we’ve specified the location and date, so the model has a clear question to answer. Always ask yourself if your prompt could be interpreted in multiple ways – if so, refine it to eliminate that ambiguity.

Be explicit about the task. If you want a summary, say “summarize”; if you need an analysis, say “analyze”; if you’re looking for suggestions, say “list suggestions for…”. Using strong action verbs and direct language helps the model understand your intent (Common AI Prompt Mistakes and How to Fix Them – AI Tools). For instance, instead of “I need information on improving my resume,” you might say “Analyze my resume and suggest five ways to improve it for a marketing job application.” This prompt clearly states the actions required (analyze and suggest) and even quantifies the expected number of suggestions.

It’s also important to avoid giving conflicting or confusing instructions. Sometimes, in an attempt to cover all bases, a prompt mixes contradictory terms or objectives. For example, asking for “a detailed summary” (summaries are meant to condense) or saying “keep it brief but also include all the important information” sends mixed signals: do you want it detailed, or brief? Such prompts can confuse the model (12 prompt engineering best practices and tips | TechTarget). The best prompts stick to a single clear goal or a consistent set of instructions (Common AI Prompt Mistakes and How to Fix Them – AI Tools). If you have multiple goals, consider breaking them into separate prompts or stages (see section 6 on iteration). Likewise, phrase requests in the positive whenever possible: tell the model what to do rather than what not to do. Prompting guides sum this up as “Do say ‘do,’ and don’t say ‘don’t’”; positive directives tend to work better than negative commands (12 prompt engineering best practices and tips | TechTarget). For instance, instead of writing “Don’t omit any important facts,” rephrase it as “Include all the important facts.” This reduces the chance of the model ignoring or misinterpreting a negated instruction (12 prompt engineering best practices and tips | TechTarget).

To recap, clarity and specificity are crucial. State your request unambiguously, include necessary specifics (like names, dates, and domains), and make sure your prompt isn’t trying to do too much at once. A precisely worded prompt leaves the LLM far less room to guess your intent, and you’ll get a response much closer to what you envisioned.
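
Two of the checks in this section, conflicting terms and negative phrasing, can be roughly approximated with keyword matching. The Python sketch below is illustrative only: the word pairs in `CONFLICTING_PAIRS` and the function `lint_prompt` are hypothetical, and a match is just a cue to reread your wording, not proof of a problem.

```python
import re
from typing import List

# Illustrative word pairs that tend to pull a prompt in opposite directions.
CONFLICTING_PAIRS = [
    ("brief", "all the important"),
    ("brief", "detailed"),
    ("concise", "comprehensive"),
]
# Matches "don't" (straight or curly apostrophe) and "do not", case-insensitively.
NEGATIVE_PATTERN = re.compile(r"\b(?:don['’]t|do not)\b", re.IGNORECASE)


def lint_prompt(prompt: str) -> List[str]:
    """Return warnings for possible conflicts and negatively phrased instructions."""
    text = prompt.lower()
    warnings = [
        f"possible conflict: '{a}' vs '{b}'"
        for a, b in CONFLICTING_PAIRS
        if a in text and b in text
    ]
    for match in NEGATIVE_PATTERN.finditer(prompt):
        warnings.append(f"negative instruction '{match.group(0)}': try a positive rephrasing")
    return warnings


print(lint_prompt("Keep it brief but also include all the important information."))
print(lint_prompt("Don’t omit any important facts."))
print(lint_prompt("Include all the important facts."))
```

Substring matching is deliberately crude (it will also catch "briefly", for example); the point is to surface candidates for a human reread.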

4. Specify the Desired Tone and Style

LLMs are capable of producing text in a wide range of tones and styles. They can be formal or casual, technical or whimsical, empathetic or matter-of-fact – it all depends on how you ask. By default, a model might respond in a neutral, explanatory tone. If that’s not what you want, you should tell the model what tone or style to adopt. Including explicit instructions about tone leads to more consistent and on-target results (10 Examples of Tone-Adjusted Prompts for LLMs).

Think about the context and purpose of your request: Should the answer sound professional and respectful, or friendly and conversational? Are you expecting a dry factual explanation, or something creative and entertaining? Add those cues to your prompt. For example, if you’re drafting a customer support email, you might prompt: “Write a professional email to a client explaining the issue with their order. Use a polite and empathetic tone throughout, and ensure the message is clear and reassuring.” A guide to tone-adjusted prompts offers a similar example: “Write a professional email to a client explaining the benefits of our new product. Use a formal and respectful tone, ensuring the email is clear and concise.” (10 Examples of Tone-Adjusted Prompts for LLMs). This explicitly instructs the model to keep the tone formal and respectful, appropriate for a business context. On the other hand, if you were creating a social media post or a fun blog, you might say “use a casual, upbeat tone” or “make it humorous”.

You can also evoke style by referencing a particular voice or genre. For creative tasks, it helps to specify the style you’re aiming for, such as “in the style of a fairy tale” or “with the suspenseful tone of a thriller novel.” For instance: “Tell a story about a detective solving a mystery, in a noir style with a serious, suspenseful tone.” This signals the mood you’re going for (dark and noir), which the model will attempt to emulate. To see the difference, compare these prompts:

- “Explain quantum computing.” (no style specified – likely a straightforward explanation)

- “Explain quantum computing in simple terms, as if I’m 11 years old, with a friendly tone and an analogy.” (now the model will aim for an accessible, friendly style with analogies to help a kid understand)

Notice that tone can be influenced by how you frame the context as well (an 11-year-old audience implies a simple, friendly tone). But it never hurts to be explicit. Phrases like “in a courteous manner,” “with enthusiasm,” and “in a neutral, factual tone” act as direct cues. Prompt engineering guides note that explicitly stating the desired tone, whether formal, casual, technical, or humorous, leads to more consistent and accurate style in outputs (10 Examples of Tone-Adjusted Prompts for LLMs). Also, make sure the tone matches your target audience and purpose. A learning context might need a patient, encouraging tone, while a policy brief would need an objective, authoritative tone. Matching the tone to the audience’s expectations keeps the response appropriate (10 Examples of Tone-Adjusted Prompts for LLMs).

In summary, don’t leave the tone to chance. If the manner of the response is important to you, say so in your prompt. This level of guidance ensures the LLM’s answer not only has the right content, but also feels right in style and voice for your needs.

5. Outline the Desired Output Format

In addition to what the model says, you often have preferences for how the answer is organized or formatted. Prompting allows you to guide the structure of the output as well. If you have a desired format – be it a list, a table, an essay, bullet points, a dialogue, etc. – make that clear in your instructions. Providing a template or explicitly stating the format can greatly increase your satisfaction with the result (Common AI Prompt Mistakes and How to Fix Them – AI Tools).

Sometimes a subtle change in the prompt can alter the output structure significantly. For example, compare:

- “Summarize the meeting notes.”

- “Summarize the meeting notes in a single paragraph, then list the key points discussed by each speaker.” (OpenAI’s New Guide on Prompt Engineering: Six Strategies for Better Results – Association of Data Scientists)

The second prompt tells the model exactly how to format the summary: first as a paragraph, and then as a list of key points for each speaker. This level of instruction yields a more organized response. OpenAI’s prompt engineering guide uses this same contrast to illustrate that spelling out the desired format leads to more useful outputs (OpenAI’s New Guide on Prompt Engineering: Six Strategies for Better Results – Association of Data Scientists). Always consider whether your query would be best answered as a step-by-step list, a set of examples, a short answer, or a long-form explanation, and then phrase the prompt to request that structure.

Techniques for specifying format include:

- Enumerated or Bulleted lists: If you want an answer in list form, say “List X reasons…”, “Give me 3 examples of…”, or “Provide a bullet-point list of pros and cons for…”.

- Sections or Headings: For a more complex response, you can instruct the model to break it into sections. E.g., “Provide a report with an introduction, three main points (with subheadings), and a conclusion.” The model will attempt to organize the output accordingly.

- Templates: You can even include a short template or example format in your prompt. For instance: “Generate a product description in this format:” followed by the lines “Name: …”, “Features: …”, and “Benefits: …”. By giving the model a pattern to follow, you make it far more likely that the output is structured the way you want (Common AI Prompt Mistakes and How to Fix Them – AI Tools).

- Length or detail level: If you have a length in mind, you can mention that too. For example, “Write a one-paragraph answer,” or “Give a brief summary (2-3 sentences) followed by a detailed explanation (5-6 sentences).” Setting an expected length or level of detail is part of formatting the output. LLMs can’t count words exactly, so they may not hit a precise length, but they will attempt to follow guidance like “concise” vs. “detailed” (12 prompt engineering best practices and tips | TechTarget).
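
If you reuse the same structure often, a small helper can write the template and length parts of the prompt for you. This Python sketch is illustrative: `format_template_prompt` is a hypothetical name, and the `...` placeholders are literal text the model is asked to fill in.

```python
from typing import List, Optional


def format_template_prompt(task: str, fields: List[str], length: Optional[str] = None) -> str:
    """Ask for output that follows a fixed 'Field: ...' layout, one field per line."""
    header = task
    if length:
        # Length guidance is approximate; models rarely hit exact counts.
        header += f" Keep it to {length}."
    lines = [f"{header} Use this format:"] + [f"{field}: ..." for field in fields]
    return "\n".join(lines)


print(format_template_prompt(
    "Generate a product description for a reusable water bottle.",
    ["Name", "Features", "Benefits"],
    length="about 80 words",
))
```

The length instruction goes before the template rather than after it, so the model is less likely to read it as one more field to fill in.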

By outlining the output format, you essentially give the model a mini “answer blueprint.” This reduces ambiguity about what the final answer should look like. If you don’t specify format, the model will choose what it thinks is best, which might not be what you had in mind. So, if format matters, include those instructions. For instance, when asking for a timeline, say “present the timeline as a list of dates and events,” or if you need an answer in a table, describe the table columns you want.

Example: You might prompt, “Compare renewable energy sources. Present the answer in a table with columns for Source, Advantages, Disadvantages, and Typical Usage.” By doing so, you’re likely to get a neatly structured table in the response, because the model knows exactly how to organize the information.

In short, take advantage of the fact that you can shape not just the content but also the format of the LLM’s response through your prompt. When content is delivered in a clear format that suits your needs, it becomes far more usable.

6. Refine and Iterate for Improvement

Even with all the above guidelines, you might not get the perfect answer on the first try – and that’s okay. Successful prompting is often an iterative process. Think of your initial prompt as a first draft. Once the model responds, you should review the output and be ready to refine your prompt or ask follow-up questions to improve the result (Iterative Prompt Refinement: Step-by-Step Guide). Iteration is a normal part of working with AI: you are essentially collaborating with the model, steering it gradually toward what you need.

Here is an effective process for iterative prompt refinement (Iterative Prompt Refinement: Step-by-Step Guide):

- Start with a clear initial prompt. Apply the principles above (goal, context, clarity, etc.) to make your best first attempt.

- Review the output carefully. Check if the answer actually solved your query. Is it accurate and on-topic? Is it detailed enough (or maybe too detailed)? Did it follow the requested format and tone? Look for any errors, omissions, or parts that seem off. This step is about identifying gaps between what you wanted and what you got (Iterative Prompt Refinement: Step-by-Step Guide).

- Refine your prompt based on the feedback. Once you see how the model interpreted your request, you can adjust your next prompt to address any issues. Maybe you realize you need to specify “in 200 words or less” because the first answer was too long, or you need to add “avoid technical jargon” because the answer was too complex. You might add constraints, clarify a term, or include an example to guide the model (Iterative Prompt Refinement: Step-by-Step Guide). Essentially, feed the model more guidance to fix what was missing.

- Test the new prompt and repeat if necessary. Ask the question again with your refined prompt (or ask a follow-up in the same conversation to tweak the response). Compare the new output with the previous one: is it closer to what you wanted? If it’s improved but not yet ideal, you can iterate again, each time homing in on the desired result (Iterative Prompt Refinement: Step-by-Step Guide). A couple of rounds of prompt tweaking often yields a significantly better answer than the first try.
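
The review, refine, and retest loop can be sketched in Python under some stated assumptions: `ask` stands in for whatever function sends a prompt to your model (here a stub, `fake_model`, so the example runs offline), and each check pairs a test on the answer with the instruction to add when that test fails. In practice the review step is usually your own judgment; automating it only works for simple, checkable properties such as length.

```python
from typing import Callable, List, Tuple

# A check is (test on the answer, instruction to append if the test fails).
Check = Tuple[Callable[[str], bool], str]


def refine(prompt: str, ask: Callable[[str], str], checks: List[Check],
           max_rounds: int = 3) -> Tuple[str, str]:
    """Re-prompt with added instructions until all checks pass or rounds run out."""
    answer = ask(prompt)
    for _ in range(max_rounds - 1):
        # Review: collect the fix for every check the current answer fails.
        fixes = [fix for passes, fix in checks if not passes(answer)]
        if not fixes:
            break
        # Refine and retest: append the fixes and ask again.
        prompt = f"{prompt} {' '.join(fixes)}"
        answer = ask(prompt)
    return prompt, answer


def fake_model(prompt: str) -> str:
    # Offline stand-in for an LLM: rambles unless explicitly asked to be short.
    return "A short answer." if "200 words or less" in prompt else "word " * 500


checks: List[Check] = [(lambda a: len(a.split()) <= 200, "Answer in 200 words or less.")]
final_prompt, final_answer = refine("Explain how vaccines work.", fake_model, checks)
print(final_prompt)
print(final_answer)
```

Capping the number of rounds mirrors the advice above: a couple of iterations usually capture most of the improvement.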

For example, imagine you asked for a travel itinerary and the result was too generic. You might follow up with, “That’s a good start. Can you add specific hotel and restaurant recommendations in the itinerary?” The model can then refine its answer. Or if the tone of a draft letter is not quite right, you can say, “Please rewrite the above response in a more formal tone and add a thank-you note at the end.” This iterative dialogue lets you zero in on the perfect output.

Why iterate? Because it aligns the results more closely with your goals and catches errors early (Iterative Prompt Refinement: Step-by-Step Guide). By reviewing and refining, you steer the model toward your needs within that conversation; nothing is retrained, but each round gives it clearer instructions. Iteration can lead to better accuracy, completeness, and overall quality. It’s often far more effective to make incremental improvements like this than to try to craft a one-shot “perfect” prompt from scratch. In fact, not spending enough time refining prompts is a common mistake: rushed prompts often result in mediocre outputs (Common Pitfalls to Avoid in AI Prompt Writing | by Michael Phillips | The Functional Technologist | Medium). Taking a bit of time to polish your prompt through trial and error can pay off in a much better answer.

Remember, you can also use multi-turn conversations strategically. If the model’s first answer is missing information, you can ask a follow-up question in the same chat to delve deeper. Within a single conversation, the earlier exchange stays in the model’s context, so you can focus on what needs improvement without repeating yourself. For instance: “Can you elaborate on the second point further?” or “Give me more examples for that explanation.” This is a form of iterative prompting within a single conversation thread.
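
Why does a follow-up like “Can you elaborate on the second point further?” work? With typical chat setups, the earlier turns are sent to the model again as part of each new request, so the model “remembers” only what is in that running history (and only as much as fits in its context window). The Python sketch below models that idea; `Conversation` and the stub `counting_model` are hypothetical stand-ins, not a particular vendor’s client.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Keeps the running message history a chat model receives on every turn."""

    def __init__(self, ask: Callable[[List[Message]], str]):
        self.ask = ask
        self.history: List[Message] = []

    def send(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        reply = self.ask(self.history)  # the full history goes out each turn
        self.history.append({"role": "assistant", "content": reply})
        return reply


def counting_model(messages: List[Message]) -> str:
    # Offline stub: reports how much of the conversation it was given.
    return f"model saw {len(messages)} message(s)"


chat = Conversation(counting_model)
print(chat.send("Give me three tips for a job interview."))
print(chat.send("Can you elaborate on the second point further?"))
```

The second call receives three messages (the first question, the first reply, and the follow-up), which is exactly why “the second point” is resolvable.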

In summary, don’t be afraid to iterate. Treat your prompt and the model’s answer as part of a feedback loop. Each iteration is an opportunity to clarify and guide the model closer to the desired output. Expert prompters often go through several revisions – it’s a normal and productive part of the process.

Tuning Your Prompts for Tone, Accuracy, Completeness, and Safety

Beyond the general principles above, there are a few specific areas that deserve extra attention: tone, accuracy, completeness, and safety. Optimizing your prompts with these in mind can help ensure the output is not only useful, but also appropriate and reliable.

- Tone and Style: We covered this in depth earlier, but it’s worth reiterating. Always double-check if the tone of the model’s response matches your intent. If it doesn’t, you likely need to adjust your prompt. For example, if you got a response that was too curt and you wanted a warmer touch, add something like “Respond in a friendly, caring tone.” Tone cues can be subtle (e.g. using “please” can soften the reply) but for consistent results, be explicit (10 Examples of Tone-Adjusted Prompts for LLMs). Also, ensure the style fits the medium – a text message can be informal, while a business report should be formal. If the initial answer’s style is off, don’t hesitate to say “Rewrite this more formally” or “Make it sound more enthusiastic and upbeat.” A well-tuned tone makes the content more effective for its purpose.

- Accuracy: LLMs sound confident, but they do not actually verify facts. That means they might occasionally produce incorrect information or outright fabrications (often called “hallucinations”). To combat this, consider prompts that encourage accuracy. If your question is factual, you can prompt “If you are not sure of a fact, say so,” or “Cite the source if possible” (and check any sources it cites, since models can invent plausible-looking references). Even better, as mentioned, provide factual context or data for the model to work from (OpenAI’s New Guide on Prompt Engineering: Six Strategies for Better Results – Association of Data Scientists). For instance, “Using the information in the following paragraph [insert text], answer the question…”. By grounding the model in real data, you get more reliable answers. After receiving an answer, verify important details independently if the information is critical. For calculations or specific data, asking the model to show its steps (e.g. “Explain your reasoning”) can reduce errors (OpenAI’s New Guide on Prompt Engineering: Six Strategies for Better Results – Association of Data Scientists). Always remember that the AI may not be 100% correct; a critical eye from you is still needed for high-stakes queries.

- Completeness: Sometimes a model’s answer, while correct, may not be comprehensive. It might answer part of your question but omit other parts. To get a complete answer, make sure your prompt explicitly asks for all the aspects you want covered. If you need a multi-faceted response, structure your prompt to mention those facets (for example: “Explain the benefits and the potential risks of X”, or “Provide at least three distinct reasons….”). If the answer still comes back incomplete, use iteration: “This is good, but please also address Y which you didn’t mention.” You can also directly prompt for thoroughness: “Give a comprehensive answer covering all major points on this topic.” The model will attempt to be exhaustive. Another trick is to have the model list items: if you ask “What are the top 5 causes of…?”, it will be inclined to produce that number of points, giving you more breadth by design. Reviewing the output for completeness is part of the iteration step – ensure every part of your question got answered, and if not, prompt again for the missing pieces.

- Safety and Appropriateness: Large language models are trained not only to provide helpful answers, but also to avoid harmful or disallowed content. As a user, you should be mindful to prompt responsibly. If your request inadvertently sounds like it’s asking for something against usage policies (e.g., advice on illicit activities or hateful content), the model may refuse or give a very generic, cautious response. To get useful answers on sensitive topics, frame questions neutrally and academically. For example, instead of a loaded or extreme phrasing like “Why is smoking awesome?”, ask “What are the health risks of smoking according to medical research?”. You can also guide the model to maintain a neutral or unbiased stance. Adding a line like “Ensure that your answer is unbiased and does not rely on stereotypes.” can be effective (26 Prompting Principles for Optimal LLM Output). This tells the model you expect a fair and safe reply. If you need the model to discuss a sensitive issue (maybe in an educational context), you can preface your request with something like “Respond in a respectful, academic manner.” If the model ever gives an output that seems inappropriate or biased, treat it as a cue to state your guidelines more explicitly; you can correct it by saying, for example, “Please restate that without any derogatory language or bias.” Remember, you are in control of the conversation’s direction. By setting the right tone and boundaries in your prompt, you encourage the AI to stay within safe and acceptable lanes. Finally, never try to force the model to break rules (so-called “jailbreak” prompts); not only is it unethical, but it is also likely to be ineffective against modern AI safeguards. Keep your requests constructive and within reasonable bounds, and the model will serve you much better.
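
The completeness check described in the list above (did every part of the question get answered?) can be approximated with a simple keyword test that drafts the follow-up prompt for you. This Python sketch is a rough heuristic: `follow_up_for_gaps` is a hypothetical helper, and keyword matching will miss aspects the model covered in different words, so treat its output as a reminder rather than a verdict.

```python
from typing import List


def follow_up_for_gaps(answer: str, aspects: List[str]) -> str:
    """Draft a follow-up prompt for aspects the answer never mentions ('' if none)."""
    lowered = answer.lower()
    missing = [aspect for aspect in aspects if aspect.lower() not in lowered]
    if not missing:
        return ""
    return "This is good, but please also address the following: " + ", ".join(missing) + "."


draft = "Solar power offers low running costs and zero emissions, which are major benefits."
print(follow_up_for_gaps(draft, ["benefits", "risks"]))
```

Listing the required aspects up front also doubles as a checklist for writing the original prompt, so the gaps are less likely to appear in the first place.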

By fine-tuning these aspects of your prompts, you elevate the quality of the AI’s responses. An ideal prompt not only asks for the right content, but does so in a way that the tone is suitable, the facts are likely to be correct, the answer is complete, and the output avoids any problematic content. It may seem like a lot to consider at first, but with practice these become second nature. You’ll start to internalize these checks: “Did I specify the tone? Is there any ambiguity? Maybe I should add a line to ensure it’s unbiased.” Taking a moment to run through this mental checklist can make a noticeable difference in the results you get.

Common Pitfalls to Avoid

Even experienced users can stumble into some common prompt pitfalls. Here are a few mistakes to watch out for, and how to avoid them:

- Vague or General Prompts: As discussed, if your prompt is too broad or nonspecific, the model may respond with something equally broad (and not very useful). Leaving out important details like the target audience, tone, or purpose is a frequent mistake (Common AI Prompt Mistakes and How to Fix Them – AI Tools). Always add specifics to focus the response. For example, instead of “Tell me about history,” narrow it down: “Give me an overview of the causes of the American Civil War.” Clarity will guide the AI to a better answer (Common Pitfalls to Avoid in AI Prompt Writing | by Michael Phillips | The Functional Technologist | Medium).

- Overly Complex or Run-On Prompts: Long, convoluted sentences with multiple requests can confuse the AI. If you ask for too many things at once (e.g. posing a compound question that touches on different topics), the model might miss some or produce a jumbled answer. It’s better to keep prompts digestible (Common Pitfalls to Avoid in AI Prompt Writing | by Michael Phillips | The Functional Technologist | Medium). If necessary, break a complex task into steps or separate prompts. For instance, ask one question at a time or number your requirements (“First do X. Then do Y.”). Simpler, well-structured prompts are easier for the model to follow (Common Pitfalls to Avoid in AI Prompt Writing | by Michael Phillips | The Functional Technologist | Medium).

- Lack of Context or Background: Providing too little context is like asking someone to solve a puzzle with missing pieces (Common Pitfalls to Avoid in AI Prompt Writing | by Michael Phillips | The Functional Technologist | Medium). If your question depends on specific background, not giving that information is a pitfall. Don’t assume the model knows exactly what you’re referring to – always include any necessary background facts or situational context. For example, “What should I pack?” is unclear (pack for what?), whereas “I’m going on a week-long hiking trip in the mountains; what should I pack?” gives context that yields a useful packing list.

- Multiple Objectives in One Prompt: Avoid asking the model to do unrelated tasks in a single prompt (e.g. “Explain quantum computing and write a poem about it”). This can lead to disjointed or incomplete results (Common Pitfalls to Avoid in AI Prompt Writing | by Michael Phillips | The Functional Technologist | Medium). Each prompt should ideally have one clear objective. If you have two distinct goals, handle them in separate interactions or clearly separate the instructions (and be prepared for a lengthy answer if you bundle them). A focused prompt makes it easier for the AI to stay on track.

- Conflicting Instructions: This happens when you accidentally include terms that clash (such as telling the model to be “brief” and “include all details” in the same prompt). The AI might prioritize one and ignore the other, or get “confused” about what you really want (12 prompt engineering best practices and tips | TechTarget). The fix is to review your prompt for any internal contradictions or mixed messages. Make sure all parts of your request align. If you truly have a trade-off (like brevity vs detail), decide which to prioritize or find a middle ground to explicitly ask for (e.g. “summarize key points concisely in two paragraphs”).

- Unrealistic Expectations: Remember that while LLMs are advanced, they have limitations. Don’t expect up-to-the-minute information beyond their training data (unless the model is connected to a live knowledge source), and don’t expect exact output lengths or flawless math every time. For instance, asking an offline model for “the latest stock price of XYZ” will fail: it can only decline or offer a stale or made-up figure. Likewise, asking it to “write exactly 100 words” will usually produce roughly that length, but rarely exactly 100 words. Know the tool’s limits; if you push for a capability it doesn’t have, you’ll be disappointed (Common Pitfalls to Avoid in AI Prompt Writing | by Michael Phillips | The Functional Technologist | Medium). Instead, work within what the model can do, or use a different tool for tasks like live data retrieval or precise calculations.

- Not Iterating, or Rushing the Prompt: Sometimes users accept the first answer they get, even if it’s not great, or fire off a quick prompt without much thought. This often leads to subpar results. If the answer isn’t sufficient, resist the urge to treat the exchange as one-and-done. As we emphasized earlier, take time to refine your prompt and try again (Common Pitfalls to Avoid in AI Prompt Writing | by Michael Phillips | The Functional Technologist | Medium). Iteration is your friend; use it rather than assuming the model “just can’t do better.” A small tweak in wording or an added detail can often improve the output dramatically.

- Ignoring Tone/Style Requirements: Failing to specify a needed tone or style can make the answer unusable for your purposes (Common Pitfalls to Avoid in AI Prompt Writing | by Michael Phillips | The Functional Technologist | Medium). If you need a particular register (say, a polite tone for an email or a persuasive tone for an essay) and don’t mention it, the model might produce something in the wrong voice. Whenever tone or style matters, include it in your prompt; otherwise you may have to rewrite the output yourself or spend extra prompts fixing it.

- Prompting for Disallowed or Harmful Content: This is a major pitfall to avoid for both ethical and practical reasons. If you try to get the model to produce inappropriate content (hate speech, explicit violence, personal data, etc.), you will most likely get a refusal or, worse, output that violates usage guidelines. Pushing the AI into unsafe territory not only breaks usage policies but also defeats the purpose of using it productively. Keep your prompts within acceptable use. If you’re discussing sensitive topics (medical, legal, etc.), frame them objectively and for constructive reasons. This keeps the conversation productive and avoids triggering the model’s safety filters unnecessarily.

Being aware of these common mistakes can save you a lot of time. When crafting your prompt, it’s a good habit to quickly self-check: Is it clear and specific? Am I giving the AI what it needs to know? Did I accidentally say something that could be misunderstood? By avoiding these pitfalls, you set yourself up for success from the get-go.

Conclusion: Mastering the Art of Prompting

Prompting is a skill, and like any skill it improves with practice. By now, you’ve seen that a successful prompt is not just a random question – it’s a carefully crafted instruction that considers goal, context, clarity, tone, format, and more. Let’s summarize the best practices we covered in this framework:

- Have a clear goal and audience in mind: Know what you want from the AI, and phrase your prompt to reflect that purpose and target reader (12 prompt engineering best practices and tips | TechTarget). A prompt tailored for a specific audience or outcome will yield a more relevant response.

- Provide necessary context: Always give the model the background info or situational details it needs to answer correctly (OpenAI’s New Guide on Prompt Engineering: Six Strategies for Better Results – Association of Data Scientists). If relevant, set the scene or assign the model a role to guide its perspective (Role Prompting: Guide LLMs with Persona-Based Tasks).

- Be specific and unambiguous: Say exactly what you mean. Include pertinent details (who, what, where, when) in the prompt itself (12 prompt engineering best practices and tips | TechTarget). Avoid vague language and don’t assume the AI will infer things that you haven’t stated.

- Direct the tone and style: If you have preferences for how the answer should sound or the form it should take, state them. Ask for the tone (formal, casual, enthusiastic, etc.) that suits your needs (10 Examples of Tone-Adjusted Prompts for LLMs), and specify any style or format requirements (list, email, story, etc.).

- Structure the output when needed: Guide the answer format by requesting a specific structure or layout (OpenAI’s New Guide on Prompt Engineering: Six Strategies for Better Results – Association of Data Scientists). Whether it’s bullet points, sections, or an example format, giving the AI an outline helps it organize the content to your liking.

- Use iteration to refine results: Don’t hesitate to polish your prompt based on the AI’s output. Review answers for accuracy and completeness, then tweak your prompt or ask follow-up questions to fill the gaps (Iterative Prompt Refinement: Step-by-Step Guide). Iterative prompting often leads to significantly better outcomes than a single try.

- Keep it user-friendly and safe: Ensure your prompt encourages accurate and unbiased information. Supply reference text for factual queries, and instruct the model to remain fair and respectful (26 Prompting Principles for Optimal LLM Output). Avoid pushing the model into areas that violate guidelines; you’ll get more useful answers by staying within safe, constructive topics.

By following this framework, you essentially form a mental checklist for each prompt you write. Over time, you’ll find that you naturally incorporate these elements without much effort. The payoff is big: you’ll get more useful, precise, and high-quality answers from LLMs consistently, making them a much more powerful tool for your everyday productivity and creativity.

| Prompting Principle | Explanation | Example Prompt |
|---|---|---|
| Goal & Audience Clarity | Clearly define purpose and intended audience. | “Explain climate change to a middle-school student.” |
| Provide Context | Include relevant background or assign roles. | “You are a customer support agent responding to a delayed shipment complaint.” |
| Specificity & Clarity | Avoid ambiguity; explicitly state your request. | “List three key advantages of electric vehicles over gasoline vehicles.” |
| Tone & Style Direction | Specify the tone and style for the output. | “Write a friendly and reassuring email apologizing for the inconvenience.” |
| Output Format Guidance | Describe the desired structure or format clearly. | “Summarize the article in two paragraphs, then list the main points.” |
| Iterative Refinement | Review and adjust prompts for improved accuracy. | “That’s good; now add more detail on the second point mentioned.” |
| Accuracy & Completeness | Guide the model toward accurate, complete responses. | “Provide a comprehensive overview of the health benefits and risks of intermittent fasting.” |
| Safety & Appropriateness | Ensure prompts encourage ethical, unbiased responses. | “Discuss cultural impacts of social media objectively, without bias.” |

In conclusion, mastering prompting can transform your interactions with AI from hit-or-miss to reliably effective. It enables you to unlock the full potential of large language models for tasks like writing, learning, problem-solving, and beyond. Remember that every conversation with an LLM is dynamic – you are in control and can guide the model to better results with the right approach. So apply these strategies, experiment, and learn from each interaction. With practice, you’ll prompt like a pro, and the AI’s responses will feel more and more like they’re exactly what you were looking for. Happy prompting!

Sources:

- Damji, J.S. “Best Prompt Techniques for Best LLM Responses.” The Modern Scientist (Medium), 2023. Emphasizes clarity and specificity, and introduces the CO-STAR prompt framework.

- OpenAI. “Prompt engineering guide: Six strategies for better results.” OpenAI Documentation, 2023. Recommends clear instructions, providing reference text, and breaking down complex tasks (summarized in “OpenAI’s New Guide on Prompt Engineering: Six Strategies for Better Results,” Association of Data Scientists).

- TechTarget. “12 Prompt Engineering Best Practices and Tips.” 2023. Outlines tips like specifying the audience, including context, and using positive language in prompts.

- Latitude. “Iterative Prompt Refinement: Step-by-Step Guide.” 2025. Describes an iterative approach to prompt tuning (review output, refine prompt, repeat) for improved results.

- Miguelañez, C. “10 Examples of Tone-Adjusted Prompts for LLMs.” Latitude Blog, 2025. Demonstrates how explicitly adjusting tone and style in prompts yields more targeted responses.

- Pareto AI. “26 Prompting Principles for Optimal LLM Output.” 2024. A comprehensive list of prompting principles, including defining the audience and encouraging unbiased responses.

- (Additional citations embedded throughout the text)